The eye test and competing theories of knowledge

Thesis, antithesis, and synthesis, as it were.

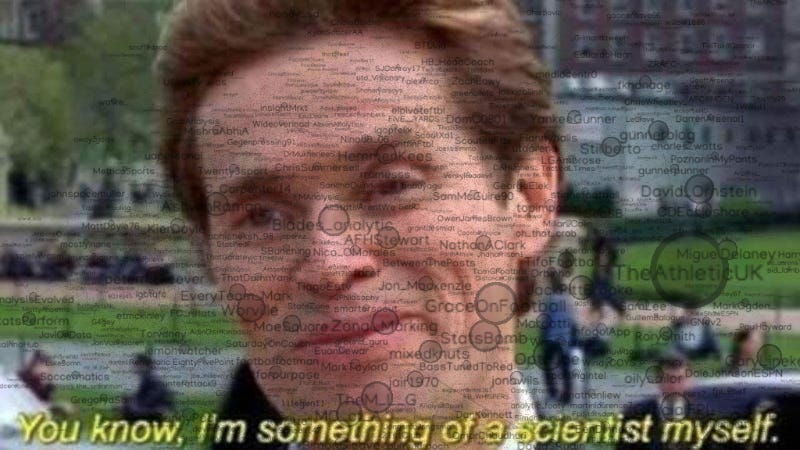

A few months ago, a youth coach published a blog post critiquing certain aspects of The Analytics community. For his efforts, he was unceremoniously shat on.

Not everything the post laid claim to held weight, but there remained a granule of truth to the critiques he leveled. At its core, I believe the discourse around advanced metrics has become a bit too myopic. Here, I lay my own claim as to why that is, how that came to be, and why it’s antithetical to the practice this form of knowledge set out to establish.

It’s a bit heavy on the jargon, so I’m including a TL;DR section for those who can’t be asked. All in all, I’m not against the use of analytical or statistical data. I’m simply suggesting we recontextualize how we understand it, so as to provide a better synthesis of the information moving forward.

Some necessary reading material:

https://hbheadcoach.wordpress.com/2020/05/04/the-eye-test/

http://homepages.wmich.edu/~baldner/kant2.pdf

TL;DR

Despite being put forth as an antithesis to ‘traditional’ knowledge, statistical and analytical insight has fallen into the same pitfalls as the knowledge it set out to replace. By assuming a position of expected and uncontested validity, more complex metrics have become commonplace in the parlance of insight without being properly examined. While this isn't inherently dangerous, it runs counter to the idea of progress, and it limits the very expansion that made analytical and statistical data possible in the first place.

Three hundred years ago, there was a great divide between the empiricists, such as John Locke and David Hume, and the rationalists, such as René Descartes, Gottfried Leibniz, and Baruch Spinoza. Each contributed a consequential argument about how our conception of knowledge functions. While Immanuel Kant eventually synthesized the two schools of thought, the conception of knowledge remains an under-discussed issue that pervades our world today. The problem of modernity is not that we face insurmountable issues, but that we believe the questions posed by those who lived before us were ever truly solved.

This isn’t to say that Kant’s marriage of the two ideologies, and his crucial concept of synthetic a priori knowledge, are not important milestones on the quest toward a greater understanding of knowledge. Rather, his insightful conclusions are only about as important as the thinking that allowed him to arrive at them.

The debate over different kinds of knowledge and their use within a conversation sits at the center of almost any industry, but it seems to have reached a point of subversion within the realm of football. In recent years, through the hallmarks of modernity (open-sourced process versus traditionalism), statisticians and their evolved analytical methods have ostensibly proven that their conception of knowledge is wholly superior to the one the footballing world used to depend on.

Where traditionalism is defined by its adherence to unquestioned dogma, statistically backed opinions rest on an unquestionable structure of information: a factoid that cannot, in any meaningful way, be disputed. If something can be codified, it cannot fundamentally be argued against. This isn’t to say the extrapolations drawn from these statistical bases are indisputable, rather that their atomic foundations are purportedly more solid than those that preceded them: namely, footballing men declaring a player good on the strength of intuition, with their own pragmatic, material success vouching for the veracity of their claims.

If Jose Mourinho said a player was good, he was good.

Of course, the move away from the traditional conception of footballing knowledge was fomented in part by its own pitfalls. When traditionally founded claims of quality led to failed expectations, new methods, ones that appeared more consistent given their methodology, paved the way for a more egalitarian conception of quality. If a player could be shown to be good through the quality of the shots they create for themselves or the chances they create for others, that offered a more grounded basis for believing in their ability, and perhaps even for deciding how that ability could best be put to use.

What seems to have been lost in this paradigm shift is the similarity these conceptions share at a fundamental level. While the acceptance of traditional knowledge may have been more closely tied to the system of objects and appearances evoked by the speaker, the basis informing the collection of supposedly superior, statistically backed information is nearly identical to the one that powered any meaningful traditional observation. Whether or not the intuitive observer ever spelled it out, or was even aware of it, the subconscious ‘data collection’ that led them to believe in a player’s quality is the same basis that informs what today’s rhetorical episteme treats as a calcified numerical ‘good’. This analog collection of data is what formed and fomented the amalgamation of statistical and analytical data to begin with. It was merely presented in a different rhetorical package.

What statistically or analytically founded knowledge allows us to conceive of is ampliative knowledge: knowledge that adds something not already contained in what we directly observe. Positive trends that cannot be perceived with the naked eye, and that do not register as positive through immediate sensory feedback, are where this minimally colored collection of information shines. Many major clubs have taken on some form of analytical consultation, be it in-house or otherwise, and changed their fortunes. What the proponents of this kind of knowledge must remember, if it is to keep developing through dialogue, is the basis on which it was conceived.

Take everyone’s favorite underlying statistic, xG (expected goals). It amalgamates statistical data perceived to have either a positive or negative effect on the likelihood of a shot being scored. Though the metric may, in its current use, be sustained by an analytic distinction (that is to say, past data that perpetuates its own claim), it had to have been conceived initially in an organic, analog manner. Amalgamating the variety of basic metrics that make up a metric such as xG may be made all the better by its own synthesis, but its inception lies with a different manner of thinking.

The claims made in the coach’s post, while largely naive in their assumptions about what professional analysts are doing, still hint at a fundamentally sound critique. Dynamic, organic phenomena may be codified. But in order to take such a form, they must be built on blocks of knowledge fundamentally distinct from those that build numerical or statistical information. No inherent positivity is attached to a forward pass into the box, nor to a shot from an advantageous position. These are events we categorize as positives because of an assumed (sometimes proven) statistical correlation that allows for some sense of predictability with a positive skew. But it is ultimately a subjective connotation that enables these conceptions of statistical positivity to be ossified into accepted, positive knowledge.

Kant’s understanding of the world as an experience fundamentally shaped by the structure of the human mind is crucial to understanding the basis of human conception. Collecting and amalgamating data for the purpose of analysis may, in an analytic sense, confirm and synthesize itself against its own self-defined points of efficacy, but its purview keeps narrowing. For this theory of knowledge to expand past its own theoretical means, it must incorporate a non-linear conception of abstraction: of that which perhaps cannot, at first, be confirmed through the tendrils of its specific language.